System Design Basics

Learn the basics of system design.

Published At

7/20/2022

Reading Time

~ 16 min read

A basic introduction to system design. These are notes I took while learning about system design.

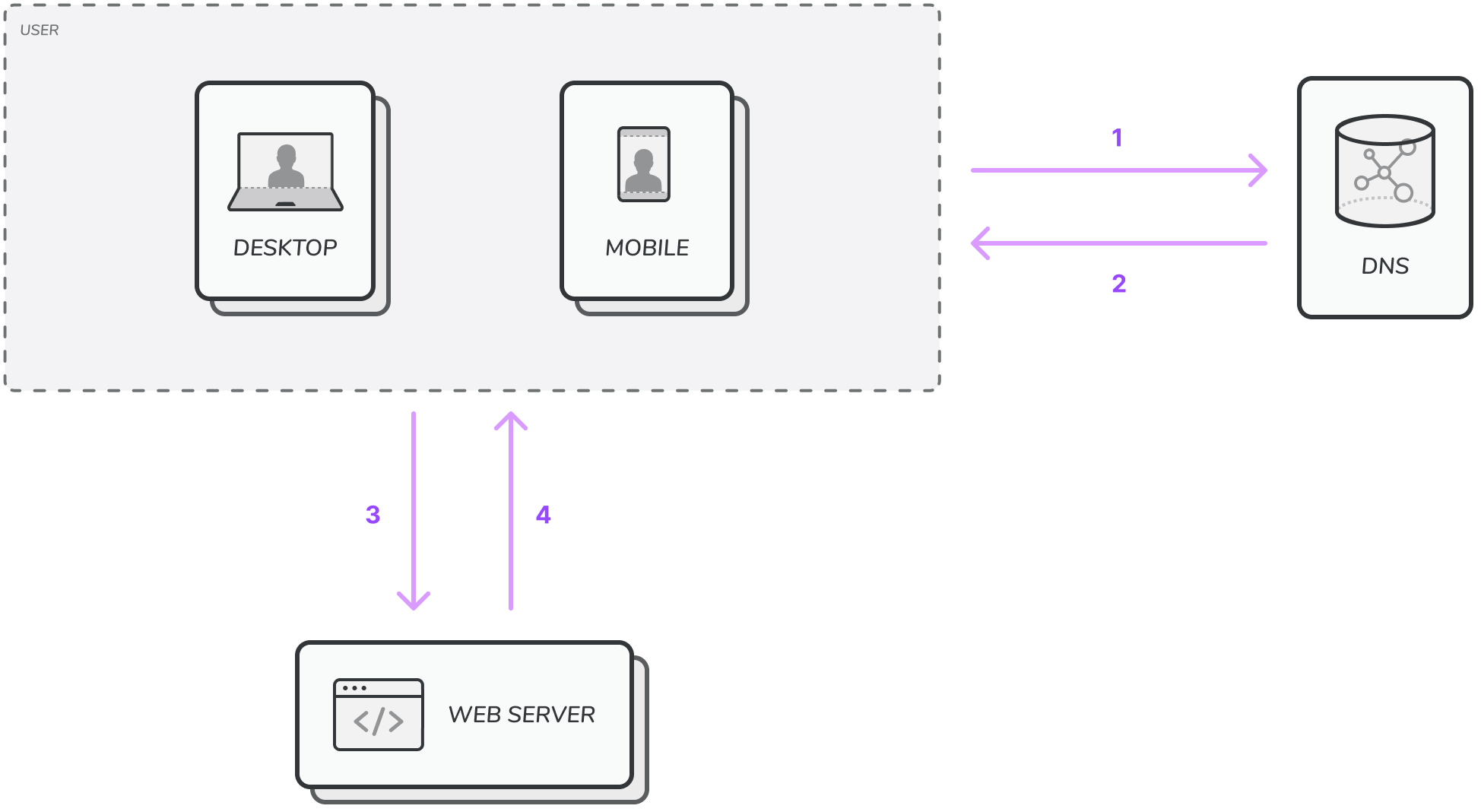

Single Server Setup

We start with a single server.

Here, the request flow is:

- When a user types a URL and hits enter, the request goes to a DNS server with the domain name from that URL.

- The DNS server returns the IP address for that domain.

- Once the browser has the IP address, it sends the request to the server at that IP address.

- The server analyzes the request and sends back a response accordingly.

DNS is usually a paid service provided by third parties and is not hosted on our own servers.
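The request flow above can be simulated in a few lines. This is only a sketch: the DNS table, the IP address, and the `handle_request` function are made-up stand-ins, and no real network call is made.

```python
# Hypothetical DNS table; a real lookup would go to a DNS provider.
DNS_RECORDS = {"api.example.com": "203.0.113.10"}


def handle_request(path: str) -> str:
    """Stand-in for the web server: inspect the request, build a response."""
    return f"200 OK: content for {path}"


# Hypothetical registry mapping an IP address to the server listening on it.
SERVERS = {"203.0.113.10": handle_request}


def fetch(domain: str, path: str) -> str:
    ip_address = DNS_RECORDS[domain]  # DNS returns the IP address for the domain
    server = SERVERS[ip_address]      # the browser contacts the server at that IP
    return server(path)               # the server sends back a response


print(fetch("api.example.com", "/users/42"))
```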

🤷♂️

Database

With the growth of users, one server is not enough, and we need multiple servers.

- One for web/mobile traffic

- One for Database

So that they can scale independently.

Databases are of two types

- Relational Databases

- Non-Relational Databases

| Relational Databases | Non-Relational Databases |
|---|---|
| SQL databases | NoSQL databases |
| Used when data is structured | Used when data is unstructured |

NoSQL databases can be grouped into four categories:

- Key Value Stores (Redis)

- Graph Stores (Neo4j)

- Column Stores (Cassandra)

- Document Stores (MongoDB)

Non-Relational Databases might be the right choice if:

- Your application needs super low latency.

- Your data is unstructured, or you do not have any relational data.

- You only need to serialize and deserialize data (JSON, XML, YAML, etc.).

- You need to store a massive amount of data.

💾

Scaling

Scaling is of two types:

- Vertical Scaling

- Horizontal Scaling

Vertical Scaling refers to scale-up, which means adding more power to your server (CPU, RAM, etc.).

- When traffic is low, it’s a great option

- Simplicity is its great advantage

However, vertical scaling has some hard limits:

- It’s impossible to add unlimited CPU and memory to a single server

- Vertical scaling does not have failover and redundancy. If a server goes down, your product goes down with it completely.

Horizontal Scaling refers to scale-out, which allows you to scale by adding more servers to your pool of resources.

It is more desirable for large-scale applications due to the limitations of vertical scaling.

📐

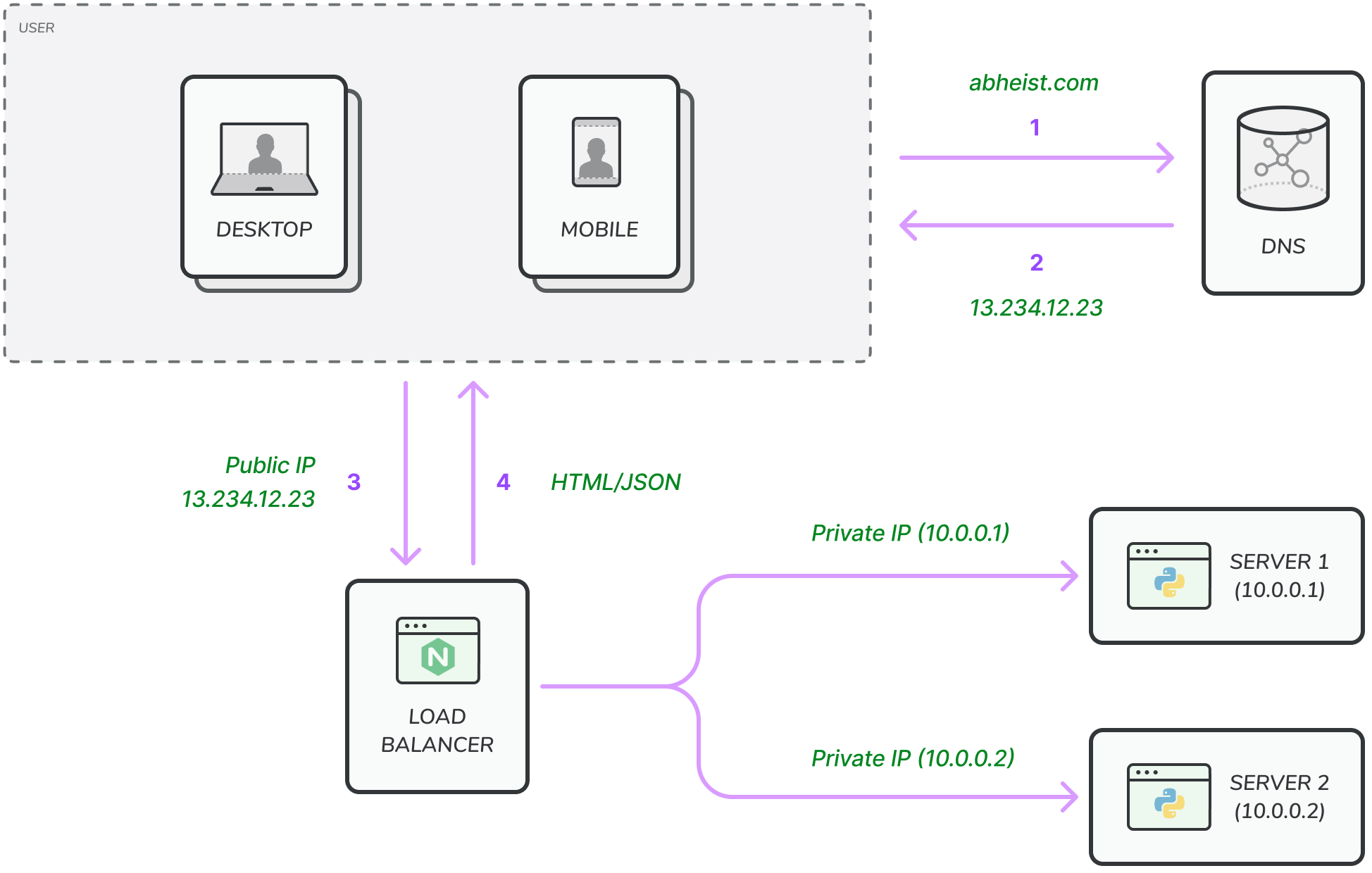

Load Balancer

If many users access the server simultaneously, the web server reaches its load limit, and users experience slower responses or fail to connect at all. A load balancer is a common technique to address these situations.

A load balancer evenly distributes incoming traffic among web servers defined in a load-balanced set.

Users connect to the public IP of the load balancer directly. The load balancer communicates with the web servers through private IPs. For better security, the web servers are not directly reachable by the public. Private IPs are only used for communication between servers on the same network.

After adding a load balancer, we have solved the failover issue of the web server. If one server goes down, the other handles the requests. If two servers cannot handle the traffic, we can add more servers to the web server pool, and the load balancer automatically starts sending requests to the new servers as well.
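One simple way to picture the distribution and failover described above is round-robin selection that skips servers marked as down. This is a minimal sketch, not how any particular load balancer works; the class name, method names, and private IP addresses are all assumptions for illustration.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in rotation, skipping ones marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._counter = itertools.count()

    def add_server(self, server):
        # New servers join the pool and start receiving traffic.
        self.servers.append(server)
        self.healthy.add(server)

    def mark_down(self, server):
        self.healthy.discard(server)

    def next_server(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        # Visit each position at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            candidate = self.servers[next(self._counter) % len(self.servers)]
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["10.0.0.1", "10.0.0.2"])  # hypothetical private IPs
print([balancer.next_server() for _ in range(4)])
balancer.mark_down("10.0.0.1")    # failover: traffic goes to the remaining server
print(balancer.next_server())
balancer.add_server("10.0.0.3")   # scale out: the new server joins the rotation
```

Real load balancers also offer other strategies (least connections, weighted distribution) and run health checks themselves rather than relying on a manual `mark_down` call.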

⚖️

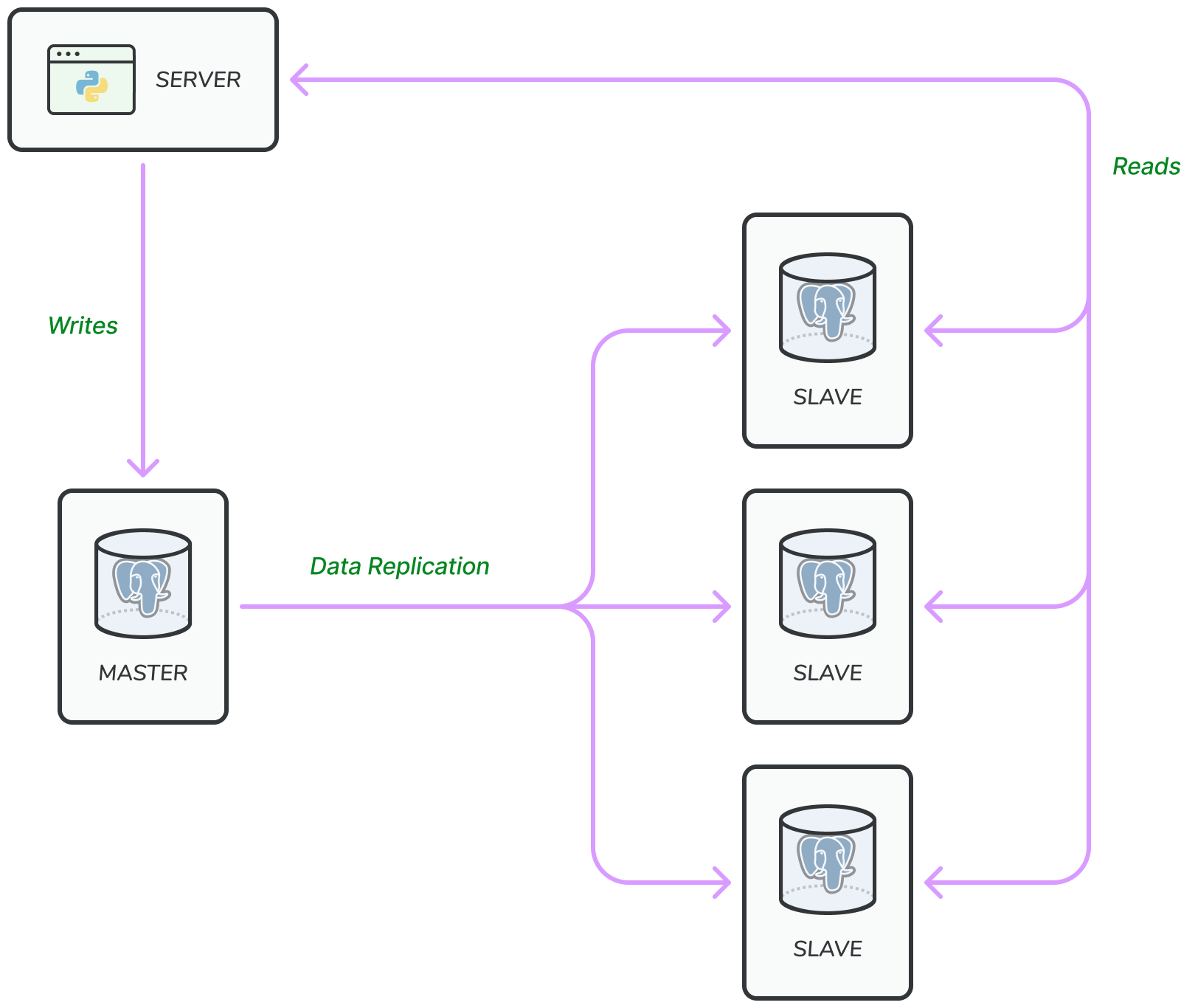

Data Replication

According to Wikipedia: Database replication can be used in many database management systems, usually with a master/slave relationship between the original (master) and the copies (slaves).

- The master database generally only supports write operations.

- A slave database gets copies of the data from the master and only supports read operations.

All data-modifying commands (insert, update, delete) must be sent to the master database.

Most applications require a much higher ratio of reads to writes. Thus, the number of slave databases in a system is usually larger than the number of master databases.

Advantages of Database Replication

- Better Performance: In the master-slave model, all writes and updates happen on the master node, whereas read operations are distributed across the slave nodes. This model improves performance because it allows more queries to be processed in parallel.

- Reliability: If one of your database servers is destroyed by a natural disaster (earthquake, etc.), the data is still preserved.

- High Availability: By replicating data across different locations, your website remains in operation even if a database is offline, because you can access the data stored on another server.

What if a database goes offline

If only one slave database is available and it goes offline, read operations are directed to the master database temporarily.

As soon as the issue is detected, a new slave database replaces the old one.

If multiple slave databases are available, read operations are redirected to the other healthy slave databases, and a new slave database replaces the old one.
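The routing rules above (writes go to the master, reads go to a healthy slave, and reads fall back to the master if no slave is available) can be sketched as follows. The class, the node names, and the "starts with SELECT" check are simplifying assumptions for illustration.

```python
import random


class ReplicatedDatabase:
    """Routes each statement to a node name based on the rules above."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)
        self.offline = set()  # slaves currently known to be down

    def route(self, sql: str) -> str:
        # Simplification: treat anything that starts with SELECT as a read.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if not is_read:
            return self.master  # all data-modifying commands go to the master
        healthy = [s for s in self.slaves if s not in self.offline]
        if healthy:
            return random.choice(healthy)  # spread reads across healthy slaves
        return self.master  # temporary fallback when every slave is offline


db = ReplicatedDatabase("master", ["slave-1", "slave-2"])  # hypothetical node names
print(db.route("INSERT INTO users VALUES (1, 'alice')"))
print(db.route("SELECT * FROM users"))
```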

💾 💾

Cache

A cache is a temporary storage area that keeps the results of expensive responses or frequently accessed data in memory so that subsequent requests are served more quickly.

Every time a new web page loads, one or more database calls are executed to fetch data. Application performance suffers greatly when the database is called repeatedly. A cache can mitigate that problem.
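A common way to use a cache is to check it first and only call the database on a miss. The sketch below assumes a tiny in-process cache with a time-to-live and a fake database that counts its calls; `TTLCache`, `FakeDatabase`, and `get_user` are illustrative names, not a real library API.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float):
        self.ttl_seconds = ttl_seconds
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._entries[key]  # expired: treat it as a miss
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, time.monotonic() + self.ttl_seconds)


class FakeDatabase:
    """Stand-in for a slow database; counts how often it is queried."""

    def __init__(self):
        self.calls = 0

    def load_user(self, user_id):
        self.calls += 1
        return {"id": user_id, "name": f"user-{user_id}"}


def get_user(cache, db, user_id):
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached              # cache hit: no database call
    user = db.load_user(user_id)   # cache miss: query the database
    cache.set(key, user)           # store the result for later requests
    return user


db = FakeDatabase()
cache = TTLCache(ttl_seconds=60)
get_user(cache, db, 7)
get_user(cache, db, 7)
print(db.calls)  # the second request was served from the cache
```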

Cache Tier

The cache tier is a temporary data store layer that is much faster than the database. The benefits of having a separate cache tier include:

- Better system performance

- Ability to reduce database workloads

- Ability to scale the cache tier independently

Considerations for using cache

- Decide when to use a cache: Consider using a cache when data is read frequently but modified infrequently. Since cached data is stored in volatile memory, a cache server is not ideal for persisting data.

- Expiration policy: It’s good practice to set an expiration time for cached data. If none is set, the data stays in memory permanently.

  - Not too short: otherwise the system has to reload data from the database too frequently.

  - Not too long: otherwise the data can become stale.

- Consistency: This involves keeping the data store and the cache in sync. Inconsistency can happen because data modifications on the data store and the cache are not in a single transaction. When scaling across multiple regions, maintaining consistency between the data store and the cache is challenging.

- Mitigating failures: A single cache server represents a potential Single Point of Failure (SPOF).

  - A Single Point of Failure is a part of the system that, if it fails, will stop the entire system from working.

  - As a result, multiple cache servers across different data centers are recommended to avoid a SPOF. Another approach is to over-provision the required memory by a certain percentage. This provides a buffer as memory usage increases.

- Eviction policy: Once the cache is full, any request to add items might cause existing items to be removed. This is called cache eviction. Least Recently Used (LRU) is the most popular cache eviction policy. Other eviction policies are:

  - Least Frequently Used (LFU)

  - First In First Out (FIFO)

  Any of them can be adopted according to the application's use cases.
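To make the LRU eviction policy concrete, here is a minimal sketch built on Python's `collections.OrderedDict`. The `LRUCache` class and its `get`/`put` methods are illustrative names; production caches implement eviction internally.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used item when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest entry last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # reading an item marks it as recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used item


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")         # "a" is now the most recently used entry
cache.put("c", 3)      # the cache is full, so "b" is evicted
print(cache.get("b"))  # None
```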

🍜

Content Delivery Network

A CDN is a network of geographically dispersed servers used to deliver static content. CDN servers cache static content like images, videos, CSS, JS files, etc.

Dynamic Content Cache: It enables the caching of HTML pages based on request path, query strings, cookies, and request headers.

How it works:

When a user visits a website, the CDN server closest to the user delivers the static content.

Considerations of using CDN

- Cost: CDNs are run by third-party providers, so you pay for using them.

- Cache expiry: Set an appropriate cache expiry time, neither too long nor too short.

- CDN fallback: If the CDN has an outage, clients should be able to detect the problem and request resources from the origin.

Invalidating files

You can remove a file from the CDN before it expires by performing one of the following operations:

- Invalidate the CDN object using the API provided by the CDN vendor.

- Use object versioning to serve a different version of the object. To version an object, you can add a parameter to the URL, such as a version number.

With a CDN and a cache added to the design:

- Web servers no longer serve static assets (JS, CSS, images, etc.). They are fetched from the CDN for better performance.

- Caching data lightens the database load.
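As an illustration of the object versioning idea above, the sketch below adds a version parameter to an asset URL so that a new version is fetched as a different object. The parameter name `v`, the helper name `versioned_url`, and the CDN domain are assumptions, not a vendor convention.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def versioned_url(url: str, version: int) -> str:
    """Return the URL with its `v` query parameter set to the given version."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query["v"] = str(version)  # a changed version yields a different URL
    return urlunsplit(parts._replace(query=urlencode(query)))


print(versioned_url("https://cdn.example.com/img/logo.png", 2))
# https://cdn.example.com/img/logo.png?v=2
```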

🌐

Stateless Web Tier

Now it is time to consider scaling the web tier horizontally. For this, we need to move state (for instance, user session data) out of the web tier. A good practice is to store session data in persistent storage such as a relational database or a NoSQL store. Each web server in the cluster can then access state data from that shared store. This is called a stateless web tier.

Stateful architecture

A stateful server and a stateless server have some key differences. A stateful server remembers client data (state) from one request to the next. A stateless server keeps no state information.

With a stateful architecture, the issue is that every request from the same client must be routed to the same server. This can be done with sticky sessions in most load balancers. However, this adds overhead. Adding or removing servers is much more difficult with this approach. It is also challenging to handle server failures.

Stateless architecture

HTTP requests can be sent to any web server, which fetches state data from a shared data store. A stateless system is simpler, more robust, and more scalable.

After the state data is removed from the web servers, auto-scaling of the web tier is easily achieved by adding or removing servers based on traffic load.
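The following sketch shows why moving the session out of the web server matters: any server can handle any request as long as all servers share the same session store. `SessionStore` is an in-memory stand-in for the shared database, and all class and server names are hypothetical.

```python
import uuid


class SessionStore:
    """Stand-in for shared persistent storage (a database or NoSQL store)."""

    def __init__(self):
        self._sessions = {}

    def create(self, data: dict) -> str:
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = dict(data)
        return session_id

    def load(self, session_id: str):
        return self._sessions.get(session_id)


class WebServer:
    """Keeps no session data itself; it only holds a reference to the store."""

    def __init__(self, name: str, store: SessionStore):
        self.name = name
        self.store = store

    def login(self, username: str) -> str:
        return self.store.create({"user": username})

    def handle(self, session_id: str) -> str:
        session = self.store.load(session_id)
        if session is None:
            return f"{self.name}: 401 not logged in"
        return f"{self.name}: hello {session['user']}"


store = SessionStore()
web_1 = WebServer("web-1", store)
web_2 = WebServer("web-2", store)
session_id = web_1.login("alice")  # the session is created through one server
print(web_2.handle(session_id))    # and a different server can serve the next request
```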

🕸️

Data Centers

In normal operation, users are GeoDNS-routed, also known as geo-routed, to the closest data center. GeoDNS is a DNS service that allows domain names to be resolved to IP addresses based on the location of the user.

In the event of a significant data center outage, we direct all traffic to a healthy data center.

Several technical challenges must be resolved to achieve a multi-datacenter setup:

- Traffic redirection: Effective tools are needed to direct traffic to the correct data center. GeoDNS can be used to direct traffic to the nearest data center based on where the user is located.

- Data synchronization: Users from different regions could use different local databases or caches. In failover cases, traffic might be routed to a data center where data is unavailable. A common strategy is to replicate data across multiple data centers.

- Test & deployment: With a multi-datacenter setup, it is important to test your website/application at different locations. Automated deployment tools are vital to keeping services consistent through all data centers.
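The routing and failover behaviour described in this section can be approximated with a function that picks the nearest healthy data center. This is a rough sketch: the data center names and coordinates are made up, and real GeoDNS services resolve location from the client's IP address rather than from explicit coordinates.

```python
import math

# Hypothetical data centers with approximate (latitude, longitude) coordinates.
DATA_CENTERS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
}


def distance_km(a, b):
    """Great-circle distance between two (lat, lon) points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))


def pick_data_center(user_location, healthy):
    """Return the closest data center among those currently healthy."""
    candidates = [name for name in DATA_CENTERS if name in healthy]
    if not candidates:
        raise RuntimeError("no healthy data center available")
    return min(candidates, key=lambda name: distance_km(user_location, DATA_CENTERS[name]))


paris = (48.85, 2.35)
print(pick_data_center(paris, healthy={"us-east", "eu-west"}))  # eu-west
print(pick_data_center(paris, healthy={"us-east"}))             # us-east (failover)
```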

🏢

Message Queue

A message queue is a durable component, stored in memory, that supports asynchronous communication. It serves as a buffer and distributes asynchronous requests. The basic architecture is simple: services called producers publish messages to the queue, and services called consumers read those messages and act on them.

Decoupling makes the message queue a preferred architecture for building scalable and reliable applications. With a message queue, the producer can post a message to the queue even when the consumer is unavailable to process it. The consumer can read messages from the queue even when the producer is unavailable.

Consider the following use case: your application supports photo customization, including cropping, sharpening, blurring, etc. Those customization tasks take time to complete. Web servers publish photo processing jobs to the message queue. Photo processing workers pick up jobs from the message queue and perform the photo customization tasks asynchronously. The producer and the consumer can be scaled independently. When the queue becomes large, more workers are added to reduce the processing time. However, if the queue is empty most of the time, the number of workers can be reduced.
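The photo-processing use case can be sketched with Python's standard `queue` and `threading` modules. An in-process queue stands in for a real message broker here, and `run_photo_pipeline`, the job format, and the fake "processing" step are assumptions for illustration.

```python
import queue
import threading


def run_photo_pipeline(jobs, worker_count):
    """Publish (photo, operation) jobs to a queue and process them with workers."""
    job_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            job = job_queue.get()
            if job is None:  # sentinel: no more work for this worker
                return
            photo, operation = job
            # Stand-in for the slow photo customization task.
            with results_lock:
                results.append(f"{operation}({photo})")

    workers = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in workers:
        thread.start()
    for job in jobs:       # the producer publishes jobs to the queue
        job_queue.put(job)
    for _ in workers:      # one sentinel per worker to shut it down
        job_queue.put(None)
    for thread in workers:
        thread.join()
    return sorted(results)


jobs = [("cat.jpg", "crop"), ("dog.jpg", "blur"), ("sky.jpg", "sharpen")]
print(run_photo_pipeline(jobs, worker_count=2))
```

Changing `worker_count` is the in-process equivalent of adding or removing workers as the queue grows or shrinks.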

🐑

Logging, Metrics, and Automation

Logging

Monitoring error logs is essential because it helps to identify errors and problems in the system. You can monitor error logs at the server level or use tools to aggregate them in a centralized service for easy search and viewing.

Metrics

Collecting different types of metrics helps us gain business insights and understand the health of the system. The following metrics are useful:

- Host level metrics: CPU, memory, disk I/O, etc.

- Aggregate level metrics: E.g., the performance of the entire database tier, cache tier, etc.

- Key business metrics: Daily active users, retention, revenue, etc.

Automation

When a system gets big and complex, we need to build or leverage automation tools to improve productivity. Continuous Integration is a good practice in which each code check-in is verified through automation, allowing teams to detect problems early. Besides, automating your build, test, and deployment processes can improve developer productivity significantly.

Adding message queues and different tools

- The design includes a message queue, which helps to make the system more loosely coupled and failure resilient.

- Logging, monitoring, metrics, and automation tools are included.

📊

Database Scaling

Vertical scaling

Vertical scaling, also known as scaling up, means adding more power (CPU, RAM, disk, etc.) to an existing machine.

There are some powerful database servers. You can get database servers with 24 TB of RAM, and a server that powerful can store and handle lots of data.

However, vertical scaling comes with some serious drawbacks:

- You can add more CPU, RAM, etc., but there are hardware limits. A single server is not enough if you have a large user base.

- Greater risk of Single Point of Failure (SPOF).

- The overall cost of vertical scaling is high. Powerful servers are much more expensive.

Horizontal scaling

Horizontal scaling, also known as sharding, is the practice of adding more servers. Sharding separates a large database into smaller, more easily managed parts called shards. Each shard shares the same schema, though the actual data on each shard is unique to that shard.

Data can be partitioned into shards in many ways. For example, user data can be allocated to a database server based on the user ID. Anytime you access data, a hash function is used to find the corresponding shard. In our example, user_id % 4 is used as the hash function. If the result equals 0, shard 0 is used to store and fetch data. If the result equals 1, shard 1 is used. The same logic applies to the other shards.

Sharding key: The most important factor to consider when implementing a sharding strategy is the choice of the sharding key. The sharding key (also known as a partition key) consists of one or more columns that determine how data is distributed. user_id is the sharding key in the previous example.
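The `user_id % 4` example above translates almost directly into code. In this sketch, plain dictionaries stand in for the four database shards; `ShardedUserStore` and its method names are illustrative.

```python
SHARD_COUNT = 4


def shard_for(user_id: int) -> int:
    """Hash function from the example: the remainder selects the shard."""
    return user_id % SHARD_COUNT


class ShardedUserStore:
    """Each dictionary stands in for one database shard with the same schema."""

    def __init__(self):
        self.shards = [dict() for _ in range(SHARD_COUNT)]

    def save(self, user_id: int, record: dict) -> None:
        self.shards[shard_for(user_id)][user_id] = record

    def load(self, user_id: int):
        return self.shards[shard_for(user_id)].get(user_id)


store = ShardedUserStore()
store.save(13, {"name": "alice"})
print(shard_for(13))   # 1, so the record lives on shard 1
print(store.load(13))  # {'name': 'alice'}
```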

Sharding is a great technique to scale the database, but it is far from a perfect solution. It introduces complexities and new challenges to the system.

Resharding data: Resharding is needed when

- A single shard can no longer hold more data due to rapid growth.

- Certain shards experience shard exhaustion faster than others due to uneven data distribution.

When shard exhaustion happens, the sharding function must be updated and data moved around. Consistent hashing is commonly used to solve this problem.
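To show why consistent hashing helps with resharding, here is a minimal hash ring with virtual nodes. It is a sketch under stated assumptions: MD5 is used only as a convenient stable hash, and `HashRing`, the `replicas` parameter, and the shard names are illustrative. With a plain `user_id % N` function, changing N remaps most keys; on a hash ring, adding a shard only moves the keys that now belong to the new shard.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    """Map a string to a large integer that is the same on every run."""
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class HashRing:
    def __init__(self, shards, replicas=100):
        self.replicas = replicas  # virtual nodes per shard, for a more even spread
        self._ring = []           # sorted list of (hash, shard) points
        for shard in shards:
            self.add_shard(shard)

    def add_shard(self, shard: str) -> None:
        for i in range(self.replicas):
            bisect.insort(self._ring, (stable_hash(f"{shard}#{i}"), shard))

    def shard_for(self, key: str) -> str:
        if not self._ring:
            raise RuntimeError("ring is empty")
        # Find the first point clockwise from the key's hash, wrapping around.
        index = bisect.bisect(self._ring, (stable_hash(key),))
        return self._ring[index % len(self._ring)][1]


ring = HashRing(["shard-0", "shard-1", "shard-2", "shard-3"])
keys = [f"user:{i}" for i in range(1000)]
before = {key: ring.shard_for(key) for key in keys}
ring.add_shard("shard-4")
moved = [key for key in keys if ring.shard_for(key) != before[key]]
print(f"{len(moved)} of {len(keys)} keys moved, all of them to the new shard")
```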

Celebrity problem: This is also called the hotspot key problem. Excessive access to a specific shard could cause server overload. Imagine data for Katy Perry, Justin Bieber, and Lady Gaga all ending up on the same shard. For a social media application, that shard will be overwhelmed with read operations. To solve this problem, we may need to allocate a shard for each celebrity. Each shard might even require further partitioning.

Join and de-normalization: Once a database has been sharded across multiple servers, it is hard to perform join operations across database shards. A common workaround is to denormalize the database so that queries can be performed in a single table.

🙆♂️

Millions of Users and Above

Scaling a system is an iterative process. Iterating on what we have learned in this chapter could get us far. More fine-tuning and new strategies are needed to scale beyond millions of users.

For example, you might need to optimize your system and decouple it into even smaller services. All the techniques learned in this chapter should provide a good foundation to take on new challenges.

How we can scale to support millions of users:

- Keep the web tier stateless

- Build redundancy at every tier

- Cache data as much as you can

- Support multiple data centers

- Host static assets in a CDN

- Scale your data tier by sharding

- Split tiers into individual services

- Monitor your system and use automation tools

🏗

Do you have any questions, or simply wish to contact me privately? Don't hesitate to shoot me a DM on Twitter.

Have a wonderful day.

Abhishek 🙏